I know that plenty of teachers use standards-based grading in AP classes, but even so, I didn’t really know how I was going to implement it in my classroom. Still, I decided to give it a whirl, inspired by the technology at my disposal: the Ultimate Gamified Learning Goal Assessment Spreadsheet that I’ve been working on, with the help of many others, for close to a year now. The tech was, of course, only the initial inspiration; my real inspiration and goal is keeping much better track of what my students actually know.

I decided that I would not try to change my assessment style, at least for now. I do take up occasional classwork assignments and warmups and use those to inform the scores on my standards (which I call Learning Goals), but the majority of my input is based on big summative tests that mimic the AP style. This is for three reasons:

- Selfish, but realistic: keeping track of lots of small assessments is difficult and time-consuming, and I struggle with the organization it requires. I do better with a big pile and a strict deadline, which traditional tests give me.

- Pedagogical: I do believe it is important for students to be able to show mastery of many different learning goals at once and to combine them in complicated ways. A large assessment covering many goals allows this more easily than smaller ones designed to cover only one or two.

- Practical: this is an AP course, and part of how I am judged as a teacher (informally, if not formally) is how well prepared my students are for the Big Day. They need practice on exams that mimic the AP exam, so my assessments stay in that style: several multiple-choice questions (usually 10 or 12) plus 3 or 4 multi-part free-response questions.

We just completed our first unit, on experimental design, so I can now report on my first experience with SBG on a big AP-format test. It was an ordeal.

First, I had to align each question with the appropriate learning goals. I covered 14 different goals on this test (mostly taken directly from my textbook). Some alignments were very straightforward, but others were much more complicated. The multiple-choice questions in particular are tricky, because students often need a solid grasp of two or three of my goals to ferret out the correct answer. I will not take the multiple choice away, but in the future I may find a way for students to explain their answers, so I can be more certain that I’m grading those goals correctly. The alignment was an extremely valuable exercise, however, because it showed me which learning goals were getting the short end of the assessment stick and which were the big stars; I ended up swapping out a couple of questions to improve the balance of the assessment.
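The balance check described above, counting how many questions touch each learning goal, is easy to automate once every question is tagged. Here is a minimal Python sketch; the question IDs (`MC1`, `FR1a`, ...), goal IDs (`LG1`, ...), and the two-questions-per-goal threshold are all made up for illustration, not taken from my actual test.

```python
from collections import Counter

# Hypothetical alignment: question ID -> learning goals it assesses.
ALIGNMENT = {
    "MC1": ["LG1"],
    "MC2": ["LG2", "LG3"],
    "MC3": ["LG3"],
    "FR1a": ["LG1", "LG4"],
    "FR1b": ["LG4"],
    "FR2": ["LG2", "LG3", "LG4", "LG5"],
}


def coverage(alignment):
    """Count how many questions touch each learning goal."""
    counts = Counter()
    for goals in alignment.values():
        counts.update(goals)
    return counts


def under_assessed(alignment, all_goals, minimum=2):
    """Return the goals assessed by fewer than `minimum` questions, sorted.

    The default of 2 is an arbitrary, assumed threshold.
    """
    counts = coverage(alignment)
    return sorted(goal for goal in all_goals if counts[goal] < minimum)


if __name__ == "__main__":
    goals = ["LG1", "LG2", "LG3", "LG4", "LG5", "LG6"]
    print(coverage(ALIGNMENT))
    print(under_assessed(ALIGNMENT, goals))
```

Here `LG5` (one question) and `LG6` (none) would be flagged, which is the cue to swap a question in.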

Actually grading the test was tricky. I made a table, organized by learning goal, that listed the connected problems and helped me keep track of scores as I graded the test. Here is an example.

This worked pretty well; next time I will probably put the actual wording of the learning goals on the table if possible, to aid reference and thinking. The hardest part was dealing with multiple-choice questions. I ended up recording every missed multiple-choice question as a 1 (out of 4) for its learning goals, but in a couple of cases where a goal was assessed by only one multiple-choice question, that ended up being kind of harsh. One of the free-response questions also ended up connecting to something like 4 different learning goals, so one student who otherwise did well but REALLY struggled with that question lost points in lots of places. I need to find a fairer, more accurate way of dealing with these big multi-goal questions.
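For anyone curious how the per-goal table could be computed, here is a minimal Python sketch of the rule described above: a missed multiple-choice question counts as a 1 (out of 4), free-response rubric scores (out of 4) pass through unchanged, and each goal’s score is the plain average of every question aligned to it. Counting a correct multiple-choice answer as a 4 is my assumption, and the question and goal IDs are hypothetical.

```python
from statistics import mean

# Hypothetical alignment: question ID -> learning goals it assesses.
ALIGNMENT = {
    "MC1": ["LG1"],
    "MC2": ["LG2"],
    "FR1": ["LG1", "LG2", "LG3"],
}


def question_score(qid, response):
    """Put one response on the 4-point learning goal scale.

    Multiple-choice items (IDs starting with "MC") take True/False:
    4 if correct (assumed), 1 if missed. Free-response items take
    their rubric score out of 4 unchanged.
    """
    if qid.startswith("MC"):
        return 4 if response else 1
    return response


def goal_scores(alignment, responses):
    """Average every question score that feeds into each learning goal."""
    by_goal = {}
    for qid, goals in alignment.items():
        score = question_score(qid, responses[qid])
        for goal in goals:
            by_goal.setdefault(goal, []).append(score)
    return {goal: mean(scores) for goal, scores in by_goal.items()}


if __name__ == "__main__":
    student = {"MC1": True, "MC2": False, "FR1": 3}
    print(goal_scores(ALIGNMENT, student))
```

With a missed `MC2` and a 3 on `FR1`, `LG2` averages to 2. If `LG2` were assessed only by `MC2`, it would sit at 1, which is exactly the harshness problem above; the sketch also makes it visible how one weak answer on a multi-goal question like `FR1` pulls down every goal it touches.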

As I went, I ALSO graded the test in the style of the AP exam. Each multiple-choice question was counted as simply right or wrong, and each free-response question was graded out of 4 using a rubric similar to the ones the AP uses. This let me compute a raw AP score and estimate (very roughly) each student’s scaled AP score. A curved version of this grade is essentially what I used last year, so it gave me an interesting benchmark for checking whether my new system produces results at least somewhat similar to what the AP exam might.
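The AP-style score can be sketched the same way. This Python version is an illustration, not the College Board’s scoring procedure: it assumes each section counts for half of a 100-point composite (the AP Statistics exam splits multiple choice and free response 50/50) and it stops short of converting to a 1-5 score, since that depends on cut scores I don’t have here. The function name is hypothetical.

```python
def ap_style_composite(mc_correct, fr_scores, n_mc, fr_max=4, total=100):
    """Combine MC and FR results into one composite score.

    Assumption: each section is worth half of `total`, mirroring the
    50/50 split between sections on the AP Statistics exam.
    mc_correct: number of multiple-choice questions answered correctly
    fr_scores:  rubric score for each free-response question (0 to fr_max)
    """
    if n_mc <= 0 or not fr_scores:
        raise ValueError("need at least one MC and one FR question")
    if not 0 <= mc_correct <= n_mc:
        raise ValueError("mc_correct must be between 0 and n_mc")
    mc_fraction = mc_correct / n_mc
    fr_fraction = sum(fr_scores) / (fr_max * len(fr_scores))
    return total * (mc_fraction + fr_fraction) / 2


if __name__ == "__main__":
    print(ap_style_composite(9, [4, 3, 2], n_mc=12))
```

For example, 9 of 12 multiple-choice questions correct plus free-response scores of 4, 3, and 2 gives a composite of 75.0.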

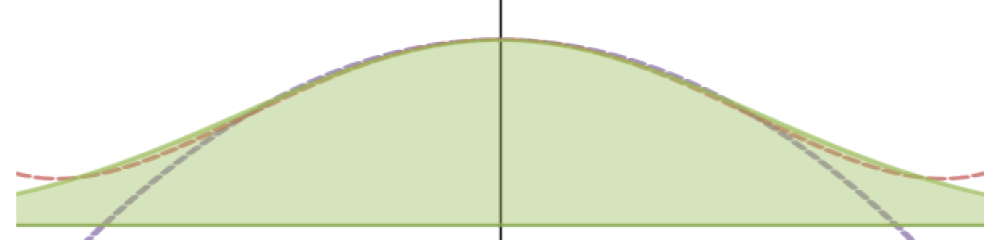

This is the scatterplot of my learning goal score average vs. the calculated AP raw score. R² = 0.71. Not bad! The high outlier is the student I mentioned who struggled with the big multi-standard problem, and if you remove her data, R² rises to 0.9. So that’s good! My system differentiates well enough to be a reasonable approximation of AP scores.
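If you want to check a correlation like this without a spreadsheet, R² for a least-squares line through paired scores is just the squared Pearson correlation. Here is a minimal Python sketch with a helper for the drop-one-outlier comparison; the function names and toy numbers are hypothetical, not the data behind the plot.

```python
def r_squared(xs, ys):
    """R^2 of the least-squares line of ys on xs (Pearson r, squared)."""
    n = len(xs)
    if n != len(ys) or n < 3:
        raise ValueError("need at least 3 paired values")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("R^2 is undefined when either variable is constant")
    return (sxy * sxy) / (sxx * syy)


def r_squared_without(xs, ys, index):
    """Recompute R^2 after dropping the point at `index` (e.g. an outlier)."""
    kept = [i for i in range(len(xs)) if i != index]
    return r_squared([xs[i] for i in kept], [ys[i] for i in kept])


if __name__ == "__main__":
    # Toy data: four points on a line plus one obvious outlier.
    goal_avg = [1, 2, 3, 4, 5]
    raw = [2, 4, 6, 8, 0]
    print(r_squared(goal_avg, raw), r_squared_without(goal_avg, raw, 4))
```

In the toy data the single outlier wipes out the correlation entirely, and dropping it restores a perfect fit, an exaggerated version of the 0.71 vs. 0.9 comparison above.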

It definitely added time and work to go through this process, but I think it will be worth it. The students mostly did very well on this test, but a few of them who need to work on some specific knowledge will now be armed to do that. And I will be better prepared to help them. A win for all.

I would love suggestions for other, easier ways to combine the AP style with a standards-based system, so please reach out to me if you have any!

Great post. I am also teaching AP Stats using standards, but I’m somewhat helped by the book we are using. Stats: Modeling the World, by Bock, Velleman, and De Veaux, has very short chapters (like sections of a traditional high-school textbook), each of which is more or less about a single standard. I love a lot of things about this book, including its ridiculous readability, but maybe the best thing is that it has really excellent assessments. There are three different quizzes (open response, similar to an AP question) for each chapter, and then 2 or 3 unit tests for each unit. Each unit test has 10 multiple-choice questions and 4 to 6 open-response questions, very much like an AP test. Pretty much every assessment is just what I would have written.

I’m also using MasteryConnect, a program for tracking learning standards. It’s pretty annoying to use and sometimes feels like it should still be in beta, but it has some excellent features. The best one is for grading multi-standard assessments. The work is front-loaded: I have to create an assessment in the software to match the one I gave and assign standards to each question (which means I sometimes have to break each free-response question into several sub-questions). But once that’s done, I can just enter each question’s score, and the program populates each standard in the tracker with a grade from 1 to 4. (I can even scan bubble sheets for the multiple-choice problems.)
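Here is a rough Python sketch of that multi-standard idea: each item (including sub-questions split out of a free-response question) carries a point value and a list of standards, points earned are pooled per standard, and the pooled percentage is mapped to a 1-4 level. The item IDs and cut points are hypothetical; this is not MasteryConnect’s actual scoring rule, which isn’t described here.

```python
from collections import defaultdict

# Hypothetical cut points: fraction of points earned -> 1-4 level.
# These thresholds are an assumption, not MasteryConnect's rule.
CUTOFFS = [(0.85, 4), (0.70, 3), (0.50, 2)]


def level(fraction):
    """Map a fraction of points earned to a 1-4 mastery level."""
    for threshold, lvl in CUTOFFS:
        if fraction >= threshold:
            return lvl
    return 1


def standard_levels(items, scores):
    """Pool points per standard across items, then convert to a level.

    items:  {item_id: (max_points, [standards the item assesses])}
    scores: {item_id: points earned}; a missing item counts as 0
    """
    earned = defaultdict(float)
    possible = defaultdict(float)
    for item_id, (max_points, standards) in items.items():
        points = scores.get(item_id, 0)
        for std in standards:
            earned[std] += points
            possible[std] += max_points
    return {std: level(earned[std] / possible[std]) for std in possible}


if __name__ == "__main__":
    test_items = {
        "MC1": (1, ["S1"]),
        "MC2": (1, ["S2"]),
        "FR1a": (2, ["S1"]),  # a free-response question split into parts
        "FR1b": (2, ["S1", "S2"]),
    }
    student = {"MC1": 1, "MC2": 0, "FR1a": 2, "FR1b": 1}
    print(standard_levels(test_items, student))
```

Pooling points (rather than averaging item scores) means a 2-point sub-question weighs twice as much as a 1-point multiple-choice item for every standard it touches.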

So my process is as follows. I teach a section, with classroom activities and homework. After a few days, I give quiz A on that chapter, which populates the tracker for that standard. If students do poorly, they request extra homework or stay for help, and can then requiz another day (before or after school) or choose to wait and see how the unit test goes. The unit test mastery results replace the quiz results, so the quizzes really serve as formative assessment, and the test, where students have to integrate everything they have learned, trumps them. After the test, students may take another unit test (again before or after school), unless the entire class totally bombs it, in which case I reteach and retest everyone.
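One way to read the replacement rule in this process is "the most recent attempt on a standard wins", which lets a unit test override earlier quizzes and a retake override the test. Here is a minimal Python sketch under that assumption; the `Attempt` record, its fields, and the dates are hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Attempt:
    """One assessment result on one standard (hypothetical record)."""
    day: date
    standard: str
    kind: str   # e.g. "quiz", "requiz", "unit test", "test retake"
    level: int  # mastery level from 1 to 4


def current_levels(attempts):
    """Return each standard's level from its most recent attempt.

    Later attempts overwrite earlier ones, so a unit test replaces the
    quizzes and a retake replaces the test. Same-day attempts keep their
    input order because sorted() is stable.
    """
    latest = {}
    for attempt in sorted(attempts, key=lambda att: att.day):
        latest[attempt.standard] = attempt.level
    return latest


if __name__ == "__main__":
    history = [
        Attempt(date(2024, 9, 5), "S1", "quiz", 2),
        Attempt(date(2024, 9, 8), "S1", "requiz", 3),
        Attempt(date(2024, 9, 6), "S2", "quiz", 3),
        Attempt(date(2024, 9, 15), "S1", "unit test", 4),
        Attempt(date(2024, 9, 15), "S2", "unit test", 2),
    ]
    print(current_levels(history))
```

Under this rule a lower test score replaces a higher quiz score (S2 drops from 3 to 2 here), which matches the idea that the test trumps the quizzes.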

Upside: even the typically grade-obsessed AP students gradually stop focusing on grades and focus more on learning. EVERYthing can be reassessed, so they have real reasons to keep trying until they understand.

Downside: TONS of grading. But unlike before, when I hated grading (it made me feel mean and disappointed, and I would agonize over each point I deducted), now I don’t mind it.

Sorry this is so long.

Hi, do you have a list of your stats standards? I would love to take a look! @bowmanimal

I’m essentially just using the Learning Objectives from TPS 5e this year. Word document is here: https://docs.google.com/a/harpethhall.org/uc?id=0B-C-lUvv4rQ4YnlrYUtyZzFpakE&export=download

I know that SBG normally uses far fewer standards than this, but I like the granularity. For me, SBG is less a change of process and more a change in how I analyze what students know. At least for now.