I’ve been learning a lot about the deep details of statistics lately, motivated partially by interest and partially by a deep desire not to accidentally put something in the CPM Statistics book that is wrong!

My current study is about bootstrapping confidence intervals. If you’ve never heard of bootstrapping, I’ll give you the introductory definition we used in our CPM book (or at least the pilot version – after the exploration in this blog post I’ll definitely be modifying it!)

I think this is a pretty good definition that gets to the heart of bootstrapping. You can play with bootstrapping yourself on a variety of websites: in the CPM text we use a problem sequence connected to the sampling distribution applet at http://shiny.mtbos.org/quantsamples but an easier-to-use option for just playing might be the StatKey bootstrapping applet. Bootstrapping has become extremely popular in introductory statistics courses, partially because it is easy to understand and partially because it is fun! It is portrayed as being a better (or at least equal) option for confidence intervals than traditional normal-distribution-based inference because it makes fewer assumptions about the population of interest, which theoretically makes it far less important that you check conditions on the population shape, sample size, and so on.
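To make the idea concrete, here is a minimal sketch of the "middle C%" bootstrap interval in Python, using only the standard library. It is not the applet's code or the R code discussed later in this post; the function name `percentile_bootstrap_ci`, its parameters, and the example data are all made up for illustration.

```python
import random
from statistics import fmean

def percentile_bootstrap_ci(sample, stat=fmean, level=0.95, n_boot=5000, rng=None):
    """Return the middle `level` share of the bootstrap distribution of `stat`."""
    rng = rng or random.Random()
    n = len(sample)
    # Resample the data with replacement, same size as the original sample,
    # and record the statistic for each resample.
    boot_stats = sorted(stat(rng.choices(sample, k=n)) for _ in range(n_boot))
    # Number of resampled statistics to cut from each tail.
    cut = round(n_boot * (1 - level) / 2)
    return boot_stats[cut], boot_stats[n_boot - 1 - cut]

# Hypothetical sample of size 10, purely for illustration.
sample = [4.1, 5.3, 2.8, 6.0, 5.5, 3.9, 4.7, 7.2, 5.1, 4.4]
low, high = percentile_bootstrap_ci(sample, rng=random.Random(1))
print(f"Sample mean: {fmean(sample):.2f}, bootstrap interval: ({low:.2f}, {high:.2f})")
```

Passing a different `stat` (for example `statistics.median`) gives the same kind of interval for another statistic.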

The problem is that it doesn’t seem to work as well as advertised, at least when it comes to dealing with problems associated with inference on means.

I started to discover the error of my ways through a post on the AP Statistics Teachers community forum. Frequent poster Robert W Hayden mentioned some simulation studies that had been done on the bootstrap that made it clear that attaching a confidence level to a bootstrapped interval without reservations is not a good idea. Essentially, the bootstrapped confidence intervals actually capture the true mean significantly less often than a traditional t-interval for a wide variety of populations with sample sizes of 30 or fewer. That is, they perform worse than traditional methods!

I was surprised by this (though now that I’ve had some time to think about it, I’m not sure I should have been). I decided to recreate part of those simulation studies using some R code of my own, just to convince myself. You can see the code I used here, but it is pretty computationally intensive: running it on the linked website will likely never finish (and may crash your browser). You can download and install RStudio on your own computer (and install the “bootstrap” package) to run it yourself if you feel the need, but it takes an hour or two to complete even on a pretty hefty computer. I’m sure I could make it run faster, but eh.

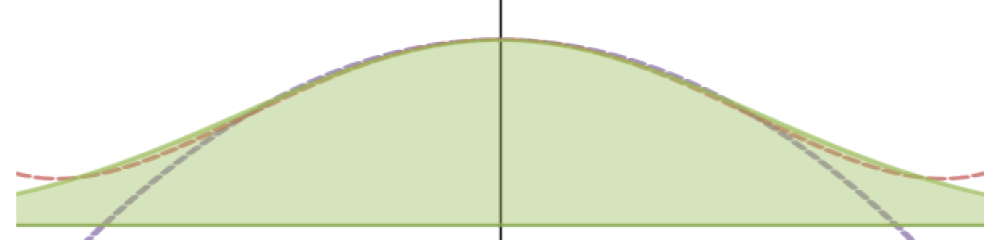

So what does the code do that takes so long? It starts by generating five imaginary populations of 10,000 elements each: one approximately uniform (with a mean of 0.5), one approximately normal (mean of 0), one moderately left skewed, one extremely left skewed (think income inequality graphs), and one bimodal. It then draws 10,000 samples of size 10 from each population and uses each of those samples to create a bootstrap confidence interval by taking 5,000 bootstrap samples and finding the middle 95% range. Finally, it calculates the proportion of the bootstrapped confidence intervals that correctly capture the true mean of the original population. It then repeats the process for samples of size 20 and size 30. With five populations and three sample sizes, that’s 750 million bootstrap samples that need to get drawn and have math done on them, which is why it takes a while to run! (And what a world we live in where this only took a couple of hours on my laptop. In 1990, this would have taken about a month on a supercomputer!)

My output:

| Population | n=10 hit rate | n=20 hit rate | n=30 hit rate |
| --- | --- | --- | --- |
| Uniform pop | 0.9137 | 0.9389 | 0.9383 |
| Normal pop | 0.906 | 0.9277 | 0.9408 |
| Moderate Left Skew | 0.9014 | 0.9315 | 0.9405 |
| Extreme Left Skew | 0.8027 | 0.8554 | 0.8803 |
| Bimodal | 0.9205 | 0.9402 | 0.9429 |

These were all calculated with the middle 95% interval of the bootstraps, so in theory they should capture the true mean about 95% of the time. Turns out, they don’t. I didn’t calculate the hit rates of traditional t-intervals, but I’m sure the t-intervals would have done better in most cases.
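If you want to try a scaled-down version of this kind of coverage experiment without the long run time, here is a rough sketch in Python (standard library only). It is not the R code I ran: the population is a hypothetical left-skewed one built by negating exponential draws, samples are drawn from it without replacement (an assumption), and the sample and resample counts are cut way down, so the hit rates it prints will be noisier than the ones above.

```python
import random
from statistics import fmean

def bootstrap_interval(sample, rng, n_boot=400, level=0.95):
    """Middle `level` share of the bootstrapped sample means."""
    n = len(sample)
    means = sorted(fmean(rng.choices(sample, k=n)) for _ in range(n_boot))
    cut = round(n_boot * (1 - level) / 2)  # resamples trimmed from each tail
    return means[cut], means[n_boot - 1 - cut]

def hit_rate(population, n, rng, n_samples=200, n_boot=400):
    """Proportion of bootstrap intervals that capture the population mean."""
    true_mean = fmean(population)
    hits = 0
    for _ in range(n_samples):
        sample = rng.sample(population, n)  # one sample from the population
        low, high = bootstrap_interval(sample, rng, n_boot)
        hits += low <= true_mean <= high
    return hits / n_samples

rng = random.Random(2024)
# Hypothetical left-skewed population (an assumption, not the one in this post).
population = [-rng.expovariate(1.0) for _ in range(10_000)]
for n in (10, 20, 30):
    print(f"Left skew, n={n} hit rate: {hit_rate(population, n, rng):.3f}")
```

Raising `n_samples` and `n_boot` toward 10,000 and 5,000 gets closer to the full experiment, at the cost of a much longer run.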

So… bootstraps don’t solve the problems of small sample sizes with mean inference. So are they useful? Yes, they are. You can use bootstrapping to estimate the shape and size of sampling distributions for statistics that don’t follow nice patterns, like medians, variances, and IQRs. There also seems to be some evidence that they may outperform t-intervals for extremely skewed populations with large sample sizes (that’s my next simulation experiment!). There are also more sophisticated bootstrap techniques that apparently work better than this naive one. But certainly my definition above was too confident. It is not particularly safe to take the middle C% of a bootstrapped distribution and call it a C% confidence interval. Nor is it the best method available to find confidence intervals, at least for means, and probably not for things like medians either, though it might be the best option for medians available to introductory students.

Edit: New Results!

One place where the bootstrap might still be a better choice than the t-distribution for means is with extremely skewed distributions and larger sample sizes. I went ahead and ran an experiment comparing the t-distribution to bootstrapping with larger sample sizes and the Extreme Left Skew distribution I used above. Here’s my output:

| Sample size | Bootstrap hit rate | T-dist hit rate |
| --- | --- | --- |
| n=50 | 0.8991 | 0.9012 |
| n=100 | 0.9153 | 0.9144 |
| n=200 | 0.9234 | 0.9239 |
| n=500 | 0.9459 | 0.9454 |

So it seems that the bootstrap works essentially on par with the t-distribution for very skewed distributions with larger sample sizes. And it takes a LOT longer. So…not a great use. Basically, I’m settling on “there’s no real point to bootstrapping CIs for means” at this point, at least using this system to calculate the interval. It doesn’t seem to improve on the t-distribution at any point. My next experiment will involve medians. One shouldn’t technically use a t-distribution to estimate medians, but how well will it work? Obviously for symmetric populations it will work just as well as means, but skewed populations should be more interesting to compare to bootstrapped values.
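For anyone who wants to poke at the bootstrap-versus-t comparison for means themselves, here is a small Python sketch (standard library only, and again not my R code). The population is a hypothetical left-skewed one, the helper names are invented, the counts are tiny compared to the real run, and the t critical value for 49 degrees of freedom is hard-coded from a standard t-table so that no extra packages are needed.

```python
import random
from statistics import fmean, stdev

T_CRIT_DF49 = 2.0096  # two-sided 95% critical value, t-distribution with 49 df

def t_interval(sample, t_crit):
    """Classic one-sample t-interval for the mean."""
    center = fmean(sample)
    margin = t_crit * stdev(sample) / len(sample) ** 0.5
    return center - margin, center + margin

def percentile_interval(sample, rng, n_boot=400, level=0.95):
    """Middle `level` share of the bootstrapped sample means."""
    n = len(sample)
    means = sorted(fmean(rng.choices(sample, k=n)) for _ in range(n_boot))
    cut = round(n_boot * (1 - level) / 2)
    return means[cut], means[n_boot - 1 - cut]

def compare(population, n, t_crit, rng, n_samples=200):
    """Hit rates of both interval types, computed on the same samples."""
    true_mean = fmean(population)
    boot_hits = t_hits = 0
    for _ in range(n_samples):
        sample = rng.sample(population, n)
        low, high = percentile_interval(sample, rng)
        boot_hits += low <= true_mean <= high
        low, high = t_interval(sample, t_crit)
        t_hits += low <= true_mean <= high
    return boot_hits / n_samples, t_hits / n_samples

rng = random.Random(50)
# Hypothetical left-skewed population (an assumption, not the one in this post).
population = [-rng.expovariate(1.0) for _ in range(10_000)]
boot_rate, t_rate = compare(population, 50, T_CRIT_DF49, rng)
print(f"Bootstrap, n=50 hit rate: {boot_rate:.3f}")
print(f"T-dist, n=50 hit rate: {t_rate:.3f}")
```

Using the same samples for both methods keeps the comparison fair: any difference in hit rate comes from the interval method, not from luck of the draw.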