I am not a statistician, really. I took AP Statistics in high school (but never the exam) and a single statistics class in college as part of my core curriculum, but I never went further. So teaching it (and co-writing a book about it) has been interesting for me – my grasp of What is Beyond is not nearly as deep as it is for, say, algebra. I’ve had to do a lot more thinking, exploring, and studying on my own. What follows is a documentation of my latest exploration, in which I’ve discovered things that I’m sure I would already have known if I had studied statistics more thoroughly.

My latest worry has to do with the idea of sampling vs. experimenting, and specifically why we are allowed to use techniques that are developed around observational studies – sampling from a large population – for the really-quite-different process of conducting an experiment.

For the sake of this exploration, consider two questions – one sample, one experiment – with the same data.

Inference with difference of two means – sampling from a large population

100 men and 100 women are randomly chosen from the population of all US citizens. They are asked how many hours they spent eating in the last week. The men report an average of 12.2 hours with a standard deviation of 2.1 hours (approximately normal). The women report an average of 13.5 hours with a standard deviation of 2.7 hours. Based on this, what is a 95% confidence interval for the difference in the average amount of time spent eating in the last week between all men and all women?

Inference with a difference of two means – experiment

200 volunteers are split into two groups of 100 each. One group is shown a movie about the value of savoring your meals and meal time, while the other group (the control) is not shown such a movie. Both groups are asked to document the amount of time they spend eating over the next week. At the end, the control group reports an average of 12.2 hours with a standard deviation of 2.1 hours, while the group that saw the movie reports an average of 13.5 hours with a standard deviation of 2.6 hours. Based on this, what is a 95% confidence interval for the increase in eating time attributable to the treatment?

The AP exam says these two problems can be done using the same technique – a 2-sample T-test – but I didn’t know why.

Sampling distributions – the basis of our statistical techniques for inference

If you already understand sampling distributions and feel confident with t-tests for sampling, skip on down; this material is solidly in the AP Stats curriculum, but I know not every one of my readers is familiar with it. We know in our first problem that the sample difference in the averages was 1.3 hours, but we also know that number would have been a little bigger or smaller if we had gotten a different sample. We need to know how much bigger or smaller it might have been to be able to give a reasonable estimate for the true difference. How do we do that? There are several techniques, but my favorite is simulation.

Imagine just for a moment that my samples were perfect (or nearly perfect). That is, the actual TRUE average eating time last week for men was 12.2 hours and for women was 13.5 hours. To make it even better, imagine those values follow a nice normal (bell) curve. I can use computers to VERY QUICKLY simulate thousands and thousands of imaginary samples of size 100 from each of those populations, find the average difference for every one of those imaginary samples, and look at ALL of those differences. This R code does that.

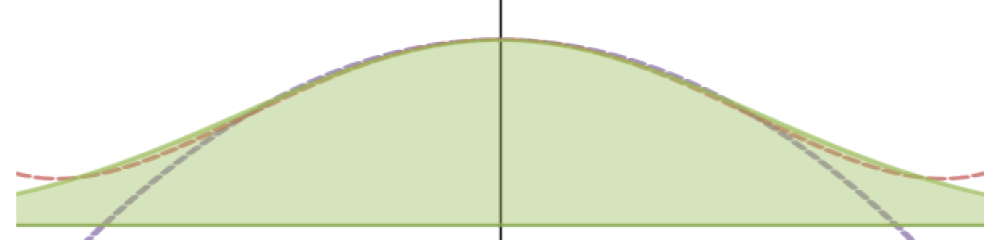

The histogram generated by the R code above is shown below. This is called the sampling distribution for the difference in means for this population and samples of this size.

You can see that this distribution is itself approximately normal in shape. Its mean is 1.3 – the “true” mean my code assumed – and its standard deviation is 0.339. Based on this, it’s reasonable to assume that if the true populations looked very much like our sample, sample differences would usually fall within about 2 standard deviations – or about 2 * 0.339 or about 0.68 hours – of the true difference. Of course, we don’t know our true difference – we only know our sample – but if we assume that the standard deviation at least is about right, we can guess that our 1.3 is probably within about 0.7 hours of the true value, so the true difference between men and women last week is probably between 0.6 and 2.0 hours.
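For readers who would rather follow along in Python than R, here is a rough sketch of the same kind of simulation. It is not the script that produced the histogram above. It assumes both populations are exactly normal with the means and SDs from problem 1, and the function name, the seed, and the 2,000 repetitions are my own illustrative choices. It also compares the simulated spread with the usual standard error formula for a difference of two independent means.

```python
import random
import statistics


def simulate_sampling_distribution(reps=2000, n=100, seed=1):
    """Draw `reps` pairs of samples; return (women's mean - men's mean) for each pair."""
    rng = random.Random(seed)
    diffs = []
    for _ in range(reps):
        # Assumption: the populations are normal with the sample's mean and SD.
        men = [rng.gauss(12.2, 2.1) for _ in range(n)]
        women = [rng.gauss(13.5, 2.7) for _ in range(n)]
        diffs.append(statistics.fmean(women) - statistics.fmean(men))
    return diffs


diffs = simulate_sampling_distribution()
sim_mean = statistics.fmean(diffs)
sim_sd = statistics.stdev(diffs)

# Standard error of a difference of two independent sample means.
formula_se = (2.1**2 / 100 + 2.7**2 / 100) ** 0.5

print(f"simulated mean of differences: {sim_mean:.3f}")
print(f"simulated SD of differences:   {sim_sd:.3f}")
print(f"formula standard error:        {formula_se:.3f}")
print(f"rough 95% interval: {1.3 - 2 * sim_sd:.2f} to {1.3 + 2 * sim_sd:.2f}")
```

The simulated SD should come out close to the formula value (a bit over 0.34), which is the same neighborhood as the 0.339 quoted above. The exact digits will shift with the seed and the number of repetitions.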

There’s more to it than that, and there are various conditions that need to be satisfied to trust this, but everything is built from that sampling distribution shown above.

What about experiments – simulating a randomization distribution

Experiments are fundamentally different from observational studies. Note that problem 2 above says “volunteers.” There is generally no sampling in experiments. We have no reason to assume our participants are a random sample of a larger population, so there’s no sampling distribution! So why can’t we trust that the difference we found is exactly right? It comes down to random assignment. Some of the people in our group would have taken longer to eat than others no matter what, and we don’t know what group those natural long-eaters got assigned to. Similarly for the short-eaters. If we want to isolate how much of the difference was actually caused by the movie, we first need to have an idea of how much sheer luck of assignment might have changed that value.

Imagine the 200 people who participate in the experiment. If we assume that the treatment actually increases (or perhaps decreases) the amount of eating time a particular person would spend, we can simulate an imaginary population of participants. We start by coming up with possible numbers for what they would do if they were in the control group. Then we add numbers to each of them that represent how much the movie would change their time. By generating this data such that the means and SDs for the control answers and treatment answers are similar to those in our sample, we have created a reasonable model for the group. Then we can simulate many thousands of different ways to assign each of them to their treatments, calculate the average difference for each assignment, and make a distribution of those differences. I call this a randomization distribution rather than a sampling distribution – as near as I can tell that’s not a real name, I made it up – but the idea is similar: to get an idea of how much variation might be caused simply by our random action. I used this R code to perform this simulation.
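Here is a rough Python sketch of that kind of re-randomization, for anyone who wants to try it without R. It is not the script used for this post, and the details are assumptions on my part: each person's movie effect is drawn independently of their baseline from a normal curve centered at 1.3, with a spread chosen so the "with movie" SD lands near 2.6. The names `y_control`, `y_treated`, and `randomization_distribution` are made up for illustration.

```python
import random
import statistics

rng = random.Random(2)
N = 200

# Hypothetical hours each volunteer would log WITHOUT the movie.
y_control = [rng.gauss(12.2, 2.1) for _ in range(N)]

# Assumption: the movie adds a person-specific amount, independent of the
# baseline, sized so the "with movie" SD comes out near 2.6.
effect_sd = (2.6**2 - 2.1**2) ** 0.5
effects = [rng.gauss(1.3, effect_sd) for _ in range(N)]
y_treated = [c + e for c, e in zip(y_control, effects)]


def randomization_distribution(y_control, y_treated, reps=3000):
    """Reassign the same people to two equal groups `reps` times.

    Returns mean(treated group) - mean(control group) for every reassignment.
    """
    people = list(range(len(y_control)))
    half = len(people) // 2
    diffs = []
    for _ in range(reps):
        rng.shuffle(people)
        treated, control = people[:half], people[half:]
        diffs.append(
            statistics.fmean([y_treated[i] for i in treated])
            - statistics.fmean([y_control[i] for i in control])
        )
    return diffs


diffs = randomization_distribution(y_control, y_treated)
group_effect = statistics.fmean(effects)  # true average effect for these 200 people
rand_sd = statistics.stdev(diffs)

print(f"true average effect in this group: {group_effect:.3f}")
print(f"mean of randomization distribution: {statistics.fmean(diffs):.3f}")
print(f"SD of randomization distribution:   {rand_sd:.3f}")
```

Under these assumptions the SD should land somewhere in the low 0.3s. The exact value depends on the particular imaginary group that gets generated and on how the individual effects are modeled, so don't expect it to reproduce any one number exactly.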

Does this look familiar? It should! It is almost exactly like the sampling distribution, even though it was created very differently! Its mean is 1.3 and its standard deviation is 0.334, essentially the same values the sampling distribution had. Since all of the math for inference tests on samples is based on that sampling distribution, it seems like the same results would work well with this randomization distribution!

And, indeed, that’s my working theory. A randomization distribution for an experiment of this type for means looks so similar to a sampling distribution from similar populations that all the same techniques apply without too much worrying.

Problems with this process

I felt really satisfied about this, but it turns out we’re still way over-simplifying in AP Stats, and possibly encouraging students to do wrong things, because the randomization distribution doesn’t ALWAYS match the sampling distribution. The simulation above assumed that we could think about things as the treatment increasing how much people will eat. But what about an experiment that compares two completely different options? You give 100 people one type of sleeping pill and they sleep 12.2 hours straight with an SD of 2.2 hours. You give another group of 100 the other type of sleeping pill and they sleep 13.5 hours straight with an SD of 2.7 hours. In this case it might be reasonable to assume that the amount a single person would sleep on one pill is completely independent of how much they might sleep on the other – it could be random! This R code simulates this possibility.

Notice anything different? It can be kind of hard to tell. But the SD of the distributions above was about 0.34. The SD of this distribution is 0.24. Much smaller, even though all the initial data was the same!
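To see where a number like 0.24 could come from, here is a rough Python sketch of the independent case (again, not the R script from this post). It assumes each person's hours on the two pills are independent normal draws with the means and SDs given above; the variable and function names are made up. As a cross-check it also computes two formulas: the usual two-sample standard error, and the finite-population variance formula usually credited to Neyman, which subtracts a term for how much the individual differences vary. That second formula is offered only for comparison, not as anything the simulations in this post relied on.

```python
import random
import statistics

rng = random.Random(3)
N = 200

# Assumption: hours on pill A and pill B are independent for each person.
pill_a = [rng.gauss(12.2, 2.2) for _ in range(N)]
pill_b = [rng.gauss(13.5, 2.7) for _ in range(N)]


def rerandomize(y_a, y_b, reps=3000):
    """Split the same people into two equal groups `reps` times.

    Returns mean(group on pill B) - mean(group on pill A) for each split.
    """
    people = list(range(len(y_a)))
    half = len(people) // 2
    diffs = []
    for _ in range(reps):
        rng.shuffle(people)
        on_b, on_a = people[:half], people[half:]
        diffs.append(
            statistics.fmean([y_b[i] for i in on_b])
            - statistics.fmean([y_a[i] for i in on_a])
        )
    return diffs


diffs = rerandomize(pill_a, pill_b)
rand_sd = statistics.stdev(diffs)

var_a = statistics.variance(pill_a)
var_b = statistics.variance(pill_b)
var_diff = statistics.variance([b - a for a, b in zip(pill_a, pill_b)])

# Two-sample formula, fed the whole group's variances for each pill.
two_sample_se = (var_a / 100 + var_b / 100) ** 0.5
# Finite-population formula: same terms minus var(individual differences) / N.
neyman_sd = (var_a / 100 + var_b / 100 - var_diff / N) ** 0.5

print(f"SD of randomization distribution: {rand_sd:.3f}")
print(f"two-sample standard error:        {two_sample_se:.3f}")
print(f"finite-population formula:        {neyman_sd:.3f}")
```

With independent outcomes and equal group sizes, the subtracted term is roughly half of the two-sample variance, so the randomization SD comes out near 0.34 divided by the square root of 2, or about 0.24. The catch is that the formula needs both columns for every person, and in a real experiment we only ever see one.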

What does that mean? It means that if we use the same statistical techniques in this case as the ones we used above, we will create confidence intervals that are WAY wider than they need to be, because we’ll be assuming our data varies as in the earlier distributions (SD about 0.34) when really it only varies as in this last one (SD about 0.24). Much less! Using traditional two-sample statistical methods in this case is quite inaccurate.

Edit: After some more experimenting, I seem to have found a way to correct for this problem in the independence case. In practice, of course, the problem is that we have no idea, usually, which of these cases (or a combo) is actually happening.

This same problem applies to simulation with proportions rather than means, made even worse by the fact that proportions are based on discrete data and so the techniques we use with proportions aren’t particularly reliable to begin with.

Honestly? I think this should be an additional condition for inference on experiments, beyond random assignment and reasonably large treatment sizes. And maybe it is, in some classes! But we certainly seem to ignore this problem in AP Statistics.
